
High Dynamic Range (HDR) technology introduced by HDMI 2.0a for ProAV

By Mike Tsinberg - August 23, 2016

HDR addresses a limitation of current TVs: the clipping of high-brightness scenes on high-contrast displays. As the example pictures show, the current Standard Dynamic Range (SDR) system does not provide a dynamic range wide enough to expose both dark and extremely bright areas adequately at the same time. These three pictures are courtesy of Joe Kane, videoessentials.com.

An underexposed image, where dark areas are not well lit:

A middle exposure level, where dark areas have more definition but bright areas are clipped:

An overexposed image, where dark areas are too bright and bright areas are lost:

TV camera capture and production processing have to take this into account, constantly adjusting exposure to capture the most significant parts of the current scene's dynamic range and create the best possible picture on current TVs that use Standard Dynamic Range (SDR) parameters.

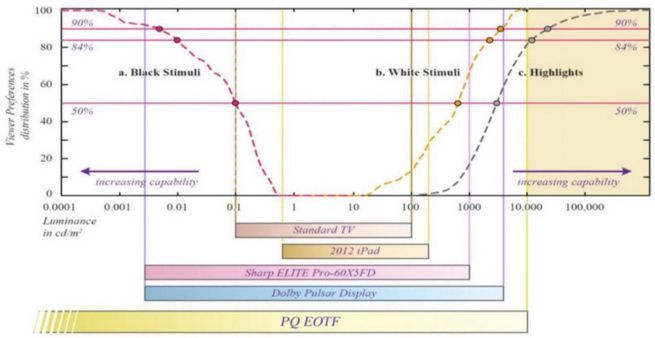

The chart developed by Dolby (2) compares the range of dark and bright levels that humans perceive with the range that various displays can reproduce.

As you can see, most standard TVs occupy a rather narrow region between 0.1 cd/m² and 100 cd/m² (1 cd/m² = 1 nit), which is SDR with a contrast ratio of 1000:1. Future display technology may increase the contrast ratio and reproduce both dark and bright scenes better, potentially offering true HDR capability. However, this research does not take into account ambient light in the home or the fact that we rarely watch TV in total darkness.

Mark Schubin commented: "It could well be the case that a good home TV puts out a black of 0.1 nits. But, if the screen is 2% reflective, and there's 50 lux illumination on it from ambient light, then there is an ambient reflection, even with the TV set off, of 1 nit. That lowers the contrast ratio for a 100-nit screen to 100:1. Now, suppose someone is watching a 1000-nit HDR TV in a pitch-black room. The 1000-nit output is bouncing off the viewer's face at roughly 15% reflectance (150 nits) and is then bouncing off the screen, again, at 2%. So maybe even that 'pitch-black' viewing condition with an HDR screen is yielding a contrast ratio of only 300:1 or so." It will be VERY DIFFICULT to create total darkness at home to get the full benefits of HDR.
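Schubin's back-of-envelope arithmetic can be reproduced in a few lines. This is a simplified sketch: like his round numbers, it treats the reflected light as the effective black level (ignoring the 0.1-nit native black), applies reflectance as a plain multiplier, and takes his 1-nit ambient figure as given rather than deriving it from the 50 lux. The function names are illustrative only.

```python
def contrast_ratio(peak_nits: float, black_floor_nits: float) -> float:
    """Contrast ratio: brightest output divided by the effective black level."""
    return peak_nits / black_floor_nits


def screen_reflection_nits(light_on_screen_nits: float, screen_reflectance: float) -> float:
    """Light bounced back by the screen surface, which raises the effective black level.
    Simplification (assumption): reflectance is applied as a plain multiplier."""
    return light_on_screen_nits * screen_reflectance


# Case 1: 100-nit SDR set in a lit room. Schubin's estimate of the ambient
# reflection (2% screen reflectance, 50 lux) is about 1 nit; taken as given here.
sdr_ratio = contrast_ratio(100.0, 1.0)

# Case 2: 1000-nit HDR set in a "pitch-black" room. The screen's own light hits the
# viewer's face (about 15% reflectance, 150 nits) and returns to the 2%-reflective screen.
face_nits = 1000.0 * 0.15
hdr_black_floor = screen_reflection_nits(face_nits, 0.02)  # about 3 nits
hdr_ratio = contrast_ratio(1000.0, hdr_black_floor)

print(f"Ideal SDR, no ambient light: {contrast_ratio(100.0, 0.1):.0f}:1")
print(f"SDR in a lit room:           {sdr_ratio:.0f}:1")
print(f"HDR in a dark room:          {hdr_ratio:.0f}:1")
```

The HDR case lands at roughly 333:1, matching Schubin's "300:1 or so": a brighter screen also brightens its own black floor, so peak brightness alone does not buy contrast.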

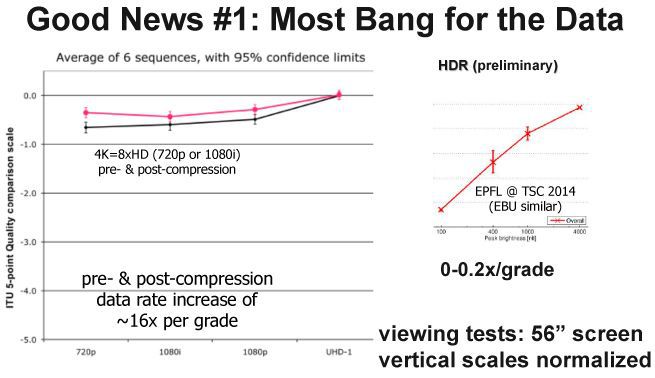

Research into TV quality improvements indicates that the introduction of HDR may have a larger impact on the quality of TV viewing than the resolution change from 1080p to 4K. Below are two charts, courtesy of Mark Schubin, comparing the perceived quality improvement of moving from current HDTV (1080p) to 4K UHD with that of moving from SDR to HDR.

As these charts show, the perceived TV quality improvement for the SDR-to-HDR transition (100 nits to 1000 nits peak brightness) is 1 point on the ITU (International Telecommunication Union) quality scale. That is double the improvement of the 1080p-to-4K transition, which is only 0.5 point on the ITU scale. For more detail, see Mark Schubin's lectures (1).

It is important to note that most HDR research has been done using 8-bit video at various resolutions: 720p, 1080i, 1080p and 4K. The HDR standard also remains flexible with respect to resolution and bit depth: HDR can be used with ANY resolution and ANY bit depth. In recent marketing, the term HDR8 is often used for 8-bit video and HDR10 for 10-bit video. The perceived picture quality difference between 8-bit and 10-bit video is very small, much smaller than the difference between 1080p and 4K video. Consequently, the picture quality difference between HDR8 and HDR10 is even less significant. Consumer TV displays can also differ in bit depth (8-bit or 10-bit). This is not reflected in the display's OSD, where the generic term HDR is used; it is only reflected in the EDID data that the display transmits to the source.

All links of the television capture-production-distribution-display chain need to be addressed to deliver HDR together with compatible SDR. Cameras must be designed to support HDR. Production must be able to process HDR adequately and create a compatible SDR format at the same time. If HDR is introduced, the transition will certainly be very gradual because of the tremendous number of legacy SDR TVs, so SDR and HDR TVs will coexist for a very long time. The SDR + HDR format should therefore be broadcast and streamed to both SDR and HDR displays simultaneously. Both SDR and HDR displays have to create a fair representation of the original scene, taking maximum advantage of an incoming format that has been pre-managed in the studio and in production. Compatibility with SDR creates many challenges for production and display. Production has to create two versions of the same content, HDR and SDR, and broadcasting and streaming have to deliver both to the corresponding displays at the same time. With so many SDR TVs in homes now, it is very likely that we will see a mix of HDR and SDR displays playing back the same content for a very long time. It is also obvious that nobody will waste twice the broadcast bandwidth on separate SDR and HDR distribution.

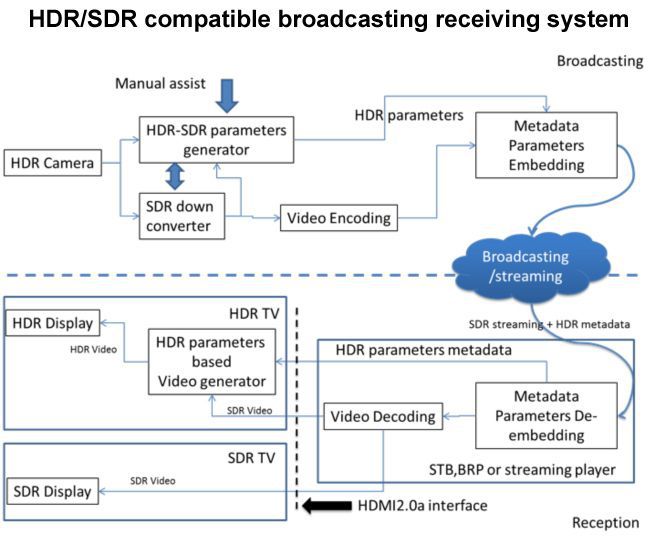

One possible method for HDR + SDR compatibility is to capture the original content in HDR and create a corresponding SDR version in the studio or production, along with HDR conversion parameters. The HDR parameters are included in the broadcast/streamed metadata, as shown in the accompanying diagram. In this method, the SDR version is derived from the HDR version in the studio or production with automatic and/or manually assisted tools. Special equipment derives the HDR metadata that HDR-capable TVs can use to recreate the original HDR image; SDR TVs ignore this metadata. In some HDR/SDR metadata proposals, such as Philips (3), the metadata requirement is only 35 bytes per scene. Let's calculate the payload requirement of HDR metadata on baseband video such as HDMI. If we assume that the scene changes every frame (the most conservative but unlikely case) and that the baseband (HDMI) video payload for a 1080p, 8-bit, 4:2:0 frame is about 3 Mbytes, then 35 bytes/frame of metadata increases the bit rate by only about 0.001% (35/3,000,000 ≈ 0.0012%). So the impact of HDR metadata on the HDMI data rate will be extremely small.
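The overhead estimate can be checked with a short sketch. It assumes uncompressed baseband video in which 4:2:0 carries 1.5 samples per pixel (full-resolution luma plus two quarter-resolution chroma planes); the helper name `frame_bytes` is illustrative, and the 35-byte figure is the Philips number quoted above.

```python
def frame_bytes(width: int, height: int, bits_per_sample: int, chroma: str) -> float:
    """Uncompressed payload of one frame, in bytes.
    Samples per pixel: 4:4:4 -> 3, 4:2:2 -> 2, 4:2:0 -> 1.5 (luma plus subsampled chroma)."""
    samples_per_pixel = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}[chroma]
    return width * height * samples_per_pixel * bits_per_sample / 8


METADATA_BYTES_PER_SCENE = 35  # Philips proposal figure quoted in the text

# Worst case from the text: a new scene, and thus a new metadata set, on every frame.
payload = frame_bytes(1920, 1080, 8, "4:2:0")
overhead_pct = 100 * METADATA_BYTES_PER_SCENE / payload

print(f"1080p 8-bit 4:2:0 frame: {payload:,.0f} bytes")
print(f"Metadata overhead:       {overhead_pct:.4f}%")
```

The exact frame size is 3,110,400 bytes, slightly more than the rounded 3 Mbytes, so the overhead comes out near 0.0011%, consistent with the "about 0.001%" estimate.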

In the method described above, the HDR camera signal is processed by the HDR/SDR parameter generator, which creates a set of metadata that helps the HDR TV re-create HDR from the SDR signal and that metadata. The same camera signal is processed by the HDR-to-SDR down-converter, which derives compatible SDR video. The SDR video is compressed, and the HDR metadata is added for transmission. On the receiving side, the HDR metadata is de-embedded from the compressed video stream and carried over the HDMI 2.0a interface (6) to the HDR TV. The HDR TV uses the decoded SDR video and this HDR metadata to re-create a fair HDR representation. An SDR TV with an HDMI 1.x-2.0 interface ignores the HDR metadata and processes the SDR signal as usual.
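A toy sketch of this SDR-plus-metadata round trip is shown below. Everything in it is hypothetical: the `HdrMetadata` fields, the single power-law tone curve and the fixed gamma are invented for illustration and do not represent the Dolby, Philips or any other proposal. It also skips quantization and compression, so the HDR rebuild here is exact; in a real chain it would only be approximate.

```python
from __future__ import annotations

from dataclasses import dataclass

SDR_PEAK_NITS = 100.0


@dataclass
class HdrMetadata:
    """Hypothetical per-scene parameters carried alongside the SDR video."""
    peak_nits: float  # brightest level in the HDR master for this scene
    gamma: float      # exponent of the toy tone curve used below


def make_metadata(hdr_scene: list[float]) -> HdrMetadata:
    """Toy 'HDR/SDR parameters generator': measure the scene peak, pick a fixed curve."""
    return HdrMetadata(peak_nits=max(hdr_scene), gamma=2.0)


def hdr_to_sdr(hdr_scene: list[float], md: HdrMetadata) -> list[float]:
    """Toy down-converter: normalize to the scene peak, compress with a power curve,
    and rescale into the 0-100 nit SDR range."""
    return [SDR_PEAK_NITS * (v / md.peak_nits) ** (1 / md.gamma) for v in hdr_scene]


def sdr_to_hdr(sdr_scene: list[float], md: HdrMetadata) -> list[float]:
    """What the HDR TV does in this toy: invert the curve using the received metadata."""
    return [md.peak_nits * (v / SDR_PEAK_NITS) ** md.gamma for v in sdr_scene]


hdr_master = [0.5, 50.0, 500.0, 1000.0]        # made-up scene luminances, in nits
metadata = make_metadata(hdr_master)            # production side
sdr_signal = hdr_to_sdr(hdr_master, metadata)   # transmitted SDR picture
hdr_rebuilt = sdr_to_hdr(sdr_signal, metadata)  # HDR TV output

# An SDR TV simply displays sdr_signal and never looks at metadata.
print("SDR signal:", [round(v, 1) for v in sdr_signal])
print("HDR rebuilt:", [round(v, 1) for v in hdr_rebuilt])
```

Only the small `HdrMetadata` record travels alongside the SDR picture, which is why the metadata overhead stays negligible regardless of resolution.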

HDR is not directly tied to luminance or chrominance resolution or to bit accuracy. SNR (signal-to-noise ratio) is the most important issue in this regard, and the TV industry has not yet dealt with it adequately. HDR can be used with any resolution or color subsampling system. The timing of the HDMI 2.0a HDR proposal is not connected to the resolution increases offered by HDMI 2.0; it is simply a good time to introduce HDR metadata capability for future camera, production, distribution and display improvements.

HDR can be used with 480i, 1080p and 4K UHD screens alike. Bit accuracy can range from 8 bits/pixel to 16 bits/pixel, and color resolution from 4:2:0 to 4:4:4. HDMI 2.0a does not specify which HDR formats are to be used, nor does it address how capture, processing, broadcast and display will deal with HDR and HDR/SDR compatibility. It simply opens up a metadata capability for future use with HDR.

The current de facto broadcasting format of 4:2:0/8-bit, at 1080p or 4K resolution, can carry HDR metadata derived for that format.

The current competing HDR systems are Dolby Vision (2), BBC/NHK (4), Technicolor/RCA (5) and Philips (3). So far, no de facto or formal standard has emerged.

HDR has nothing to do with resolution, color space or bit accuracy. HDMI 2.0a (6) created a placeholder in the HDMI standard in the form of a metadata packet that the HDR TV receiver can use. This is similar to what HDMI 1.3 introduced for the xvYCC color space, except that HDR also requires revamping every part of the TV chain. As we know, xvYCC is not in widespread use, even though it only required changes to the camera and the display. HDR requires very different display technology and very specific lighting control in the home that has not been invented yet. As for format, HDR can ride on ANY resolution, 1080p or 4K, including the 4:2:0/8-bit format.

For all these technological reasons, including the complete revamping of the entire broadcasting, distribution and reception TV chain, it seems that HDR may only have a chance of being implemented in the very distant future, if ever.

References:

(1) Mark Schubin Lectures:

Introduction: https://www.youtube.com/watch?v=fgf3hDqUB04

HFR: https://www.youtube.com/watch?v=iHWsW6PmHRw

higher-rez: https://www.youtube.com/watch?v=S0BmVbfNQT4

HDR: https://www.youtube.com/watch?v=qYTdVrv2N1Y

(2) Dolby Vision white paper: http://www.dolby.com/us/en/technologies/dolby-vision/dolby-vision-white-paper.pdf

(3) Philips HDR white paper: http://www.ip.philips.com/data/downloadables/1/9/7/9/philips_hdr_white_paper.pdf

(4) ARIB STD-B67 version 1.0: http://www.arib.or.jp/english/html/overview/doc/2-STD-B67v1_0.pdf